Strong Migrations

Catch unsafe migrations in development

:tangerine: Battle-tested at Instacart

Installation

Add this line to your application’s Gemfile:

gem 'strong_migrations'

How It Works

Strong Migrations detects potentially dangerous operations in migrations, prevents them from running by default, and provides instructions on safer ways to do what you want.

Dangerous Operations

The following operations can cause downtime or errors:

- [+] removing a column

- [+] adding a column with a default value

- [+] backfilling data

- [+] adding an index non-concurrently

- [+] adding a reference

- [+] adding a foreign key

- [+] changing the type of a column

- [+] renaming a column

- [+] renaming a table

- [+] creating a table with the

forceoption - [+] setting

NOT NULLon an existing column - [+] adding a

jsoncolumn

Optional checks:

- [+] removing an index non-concurrently

Best practices:

- [+] keeping non-unique indexes to three columns or less

The Zero Downtime Way

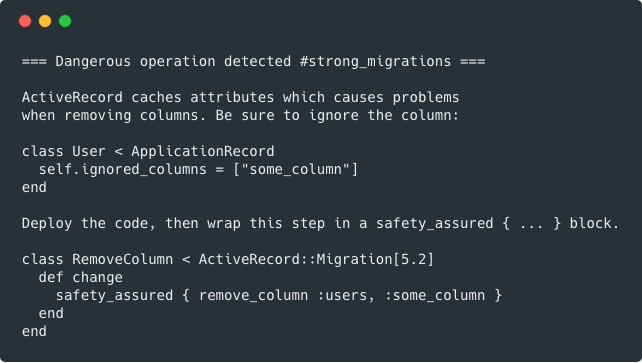

Removing a column

Bad

ActiveRecord caches database columns at runtime, so if you drop a column, it can cause exceptions until your app reboots.

class RemoveSomeColumnFromUsers < ActiveRecord::Migration[6.0]

def change

remove_column :users, :some_column

end

end

Good

- Tell ActiveRecord to ignore the column from its cache

class User < ApplicationRecord

self.ignored_columns = ["some_column"]

end

- Deploy code

- Write a migration to remove the column (wrap in

safety_assuredblock)

class RemoveSomeColumnFromUsers < ActiveRecord::Migration[6.0]

def change

safety_assured { remove_column :users, :some_column }

end

end

- Deploy and run migration

Adding a column with a default value

Note: This operation is safe in Postgres 11+.

Bad

Adding a column with a default value to an existing table causes the entire table to be rewritten.

class AddSomeColumnToUsers < ActiveRecord::Migration[6.0]

def change

add_column :users, :some_column, :text, default: "default_value"

end

end

Good

Instead, add the column without a default value, then change the default.

class AddSomeColumnToUsers < ActiveRecord::Migration[6.0]

def up

add_column :users, :some_column, :text

change_column_default :users, :some_column, "default_value"

end

def down

remove_column :users, :some_column

end

end

See the next section for how to backfill.

Backfilling data

Bad

Backfilling in the same transaction that alters a table locks the table for the duration of the backfill.

class AddSomeColumnToUsers < ActiveRecord::Migration[6.0]

def change

add_column :users, :some_column, :text

User.update_all some_column: "default_value"

end

end

Also, running a single query to update data can cause issues for large tables.

Good

There are three keys to backfilling safely: batching, throttling, and running it outside a transaction. Use the Rails console or a separate migration with disable_ddl_transaction!.

class BackfillSomeColumn < ActiveRecord::Migration[6.0]

disable_ddl_transaction!

def change

User.unscoped.in_batches do |relation|

relation.update_all some_column: "default_value"

sleep(0.1) # throttle

end

end

end

Adding an index

Bad

In Postgres, adding an index non-concurrently locks the table.

class AddSomeIndexToUsers < ActiveRecord::Migration[6.0]

def change

add_index :users, :some_column

end

end

Good

Add indexes concurrently.

class AddSomeIndexToUsers < ActiveRecord::Migration[6.0]

disable_ddl_transaction!

def change

add_index :users, :some_column, algorithm: :concurrently

end

end

If you forget disable_ddl_transaction!, the migration will fail. Also, note that indexes on new tables (those created in the same migration) don’t require this. Check out gindex to quickly generate index migrations without memorizing the syntax.

Adding a reference

Bad

Rails adds an index non-concurrently to references by default, which is problematic for Postgres.

class AddReferenceToUsers < ActiveRecord::Migration[6.0]

def change

add_reference :users, :city

end

end

Good

Make sure the index is added concurrently.

class AddReferenceToUsers < ActiveRecord::Migration[6.0]

disable_ddl_transaction!

def change

add_reference :users, :city, index: {algorithm: :concurrently}

end

end

Adding a foreign key

Bad

In Postgres, new foreign keys are validated by default, which acquires a ShareRowExclusiveLock that can be expensive on large tables.

class AddForeignKeyOnUsers < ActiveRecord::Migration[6.0]

def change

add_foreign_key :users, :orders

end

end

Good

Instead, validate it in a separate migration with a more agreeable RowShareLock. This approach is documented by Postgres to have “the least impact on other work.”

For Rails 5.2+, use:

class AddForeignKeyOnUsers < ActiveRecord::Migration[6.0]

def change

add_foreign_key :users, :orders, validate: false

end

end

Then validate it in a separate migration.

class ValidateForeignKeyOnUsers < ActiveRecord::Migration[6.0]

def change

validate_foreign_key :users, :orders

end

end

For Rails < 5.2, use:

class AddForeignKeyOnUsers < ActiveRecord::Migration[5.1]

def change

safety_assured do

execute 'ALTER TABLE "users" ADD CONSTRAINT "fk_rails_c1e9b98e31" FOREIGN KEY ("order_id") REFERENCES "orders" ("id") NOT VALID'

end

end

end

Then validate it in a separate migration.

class ValidateForeignKeyOnUsers < ActiveRecord::Migration[5.1]

def change

safety_assured do

execute 'ALTER TABLE "users" VALIDATE CONSTRAINT "fk_rails_c1e9b98e31"'

end

end

end

Renaming or changing the type of a column

Bad

class RenameSomeColumn < ActiveRecord::Migration[6.0]

def change

rename_column :users, :some_column, :new_name

end

end

or

class ChangeSomeColumnType < ActiveRecord::Migration[6.0]

def change

change_column :users, :some_column, :new_type

end

end

One exception is changing a varchar column to text, which is safe in Postgres.

Good

A safer approach is to:

- Create a new column

- Write to both columns

- Backfill data from the old column to the new column

- Move reads from the old column to the new column

- Stop writing to the old column

- Drop the old column

Renaming a table

Bad

class RenameUsersToCustomers < ActiveRecord::Migration[6.0]

def change

rename_table :users, :customers

end

end

Good

A safer approach is to:

- Create a new table

- Write to both tables

- Backfill data from the old table to new table

- Move reads from the old table to the new table

- Stop writing to the old table

- Drop the old table

Creating a table with the force option

Bad

The force option can drop an existing table.

class CreateUsers < ActiveRecord::Migration[6.0]

def change

create_table :users, force: true do |t|

# ...

end

end

end

Good

Create tables without the force option.

class CreateUsers < ActiveRecord::Migration[6.0]

def change

create_table :users do |t|

# ...

end

end

end

Setting NOT NULL on an existing column

Bad

In Postgres, setting NOT NULL on an existing column requires an AccessExclusiveLock, which is expensive on large tables.

class SetSomeColumnNotNull < ActiveRecord::Migration[6.0]

def change

change_column_null :users, :some_column, false

end

end

Good

Instead, add a constraint:

class SetSomeColumnNotNull < ActiveRecord::Migration[6.0]

def change

safety_assured do

execute 'ALTER TABLE "users" ADD CONSTRAINT "users_some_column_null" CHECK ("some_column" IS NOT NULL) NOT VALID'

end

end

end

Then validate it in a separate migration.

class ValidateSomeColumnNotNull < ActiveRecord::Migration[6.0]

def change

safety_assured do

execute 'ALTER TABLE "users" VALIDATE CONSTRAINT "users_some_column_null"'

end

end

end

Note: This is not 100% the same as NOT NULL column constraint. Here’s a good explanation.

Using change_column_null with a default value

Bad

This generates a single UPDATE statement to set the default value.

class ChangeSomeColumnNull < ActiveRecord::Migration[6.0]

def change

change_column_null :users, :some_column, false, "default_value"

end

end

Good

Backfill the column safely. Then use:

class ChangeSomeColumnNull < ActiveRecord::Migration[6.0]

def change

change_column_null :users, :some_column, false

end

end

Note: In Postgres, change_column_null is still not safe with this method.

Adding a json column

Bad

In Postgres, there’s no equality operator for the json column type, which can cause errors for existing SELECT DISTINCT queries.

class AddPropertiesToUsers < ActiveRecord::Migration[6.0]

def change

add_column :users, :properties, :json

end

end

Good

Use jsonb instead.

class AddPropertiesToUsers < ActiveRecord::Migration[6.0]

def change

add_column :users, :properties, :jsonb

end

end

Optional Checks

Some operations rarely cause issues in practice, but can be checked if desired. Enable checks with:

StrongMigrations.enable_check(:remove_index)

To start a check only after a specific migration, use:

StrongMigrations.enable_check(:remove_index, start_after: 20170101000000)

Removing an index

Bad

In Postgres, removing an index non-concurrently locks the table for a brief period.

class RemoveSomeIndexFromUsers < ActiveRecord::Migration[6.0]

def change

remove_index :users, :some_column

end

end

Good

Remove indexes concurrently.

class RemoveSomeIndexFromUsers < ActiveRecord::Migration[6.0]

disable_ddl_transaction!

def change

remove_index :users, column: :some_column, algorithm: :concurrently

end

end

Best Practices

Keeping non-unique indexes to three columns or less

Bad

Adding a non-unique index with more than three columns rarely improves performance.

class AddSomeIndexToUsers < ActiveRecord::Migration[6.0]

def change

add_index :users, [:a, :b, :c, :d]

end

end

Good

Instead, start an index with columns that narrow down the results the most.

class AddSomeIndexToUsers < ActiveRecord::Migration[6.0]

def change

add_index :users, [:b, :d]

end

end

For Postgres, be sure to add them concurrently.

Assuring Safety

To mark a step in the migration as safe, despite using a method that might otherwise be dangerous, wrap it in a safety_assured block.

class MySafeMigration < ActiveRecord::Migration[6.0]

def change

safety_assured { remove_column :users, :some_column }

end

end

Certain methods like execute and change_table cannot be inspected and are prevented from running by default. Make sure what you’re doing is really safe and use this pattern.

Custom Checks

Add your own custom checks with:

StrongMigrations.add_check do |method, args|

if method == :add_index && args[0].to_s == "users"

stop! "No more indexes on the users table"

end

end

Use the stop! method to stop migrations.

Note: Since remove_column always requires a safety_assured block, it’s not possible to add a custom check for remove_column operations.

Disable Checks

Disable specific checks with:

StrongMigrations.disable_check(:add_index)

Check the source code for the list of keys.

Existing Migrations

To mark migrations as safe that were created before installing this gem, create an initializer with:

StrongMigrations.start_after = 20170101000000

Use the version from your latest migration.

Dangerous Tasks

For safety, dangerous database tasks are disabled in production - db:drop, db:reset, db:schema:load, and db:structure:load. To get around this, use:

SAFETY_ASSURED=1 rails db:drop

Faster Migrations

Only dump the schema when adding a new migration. If you use Git, create an initializer with:

ActiveRecord::Base.dump_schema_after_migration = Rails.env.development? &&

`git status db/migrate/ --porcelain`.present?

Schema Sanity

Columns can flip order in db/schema.rb when you have multiple developers. One way to prevent this is to alphabetize them. Add to the end of your Rakefile:

task "db:schema:dump": "strong_migrations:alphabetize_columns"

Custom Messages

To customize specific messages, create an initializer with:

StrongMigrations.[:add_column_default] = "Your custom instructions"

Check the source code for the list of keys.

Postgres-Specific Features

Analyze Tables

Analyze tables automatically (to update planner statistics) after an index is added. Create an initializer with:

StrongMigrations.auto_analyze = true

Lock Timeout

It’s a good idea to set a lock timeout for the database user that runs migrations. This way, if migrations can’t acquire a lock in a timely manner, other statements won’t be stuck behind it. Here’s a great explanation of how lock queues work.

ALTER ROLE myuser SET lock_timeout = '10s';

There’s also a gem you can use for this.

Target Version

If your development database version is different from production, you can specify the production version so the right checks are run in development.

StrongMigrations.target_postgresql_version = 10 # or 9.6, etc

For safety, this option only affects development and test environments. In other environments, the actual server version is always used.

Additional Reading

Credits

Thanks to Bob Remeika and David Waller for the original code and Sean Huber for the bad/good readme format.

Contributing

Everyone is encouraged to help improve this project. Here are a few ways you can help:

- Report bugs

- Fix bugs and submit pull requests

- Write, clarify, or fix documentation

- Suggest or add new features

To get started with development and testing:

git clone https://github.com/ankane/strong_migrations.git

cd strong_migrations

bundle install

bundle exec rake test